MultiDevice Environments: Studying Group Collaboration in Multi-Device Environments

Team:

Lauren Westendorf, Diana Tosca, Midori Yang, Andrew Kun, Orit Shaer

Publications:

- Lauren Westendorf, Orit Shaer, Petra Varsanyi, Hidde van der Meulen, and Andrew L. Kun. 2017. Understanding Collaborative Decision Making Around a Large-Scale Interactive Tabletop. Proc. ACM Hum.-Comput. Interact. 1, CSCW, Article 110 (December 2017), 21 pages. DOI: https://doi.org/10.1145/3134745

- Hidde van der Meulen, Andrew L. Kun, and Orit Shaer. 2017. What Are We Missing?: Adding Eye-Tracking to the HoloLens to Improve Gaze Estimation Accuracy. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces (ISS '17). ACM, New York, NY, USA, 396-400. DOI: https://doi.org/10.1145/3132272.3132278

- Hidde van der Meulen, Petra Varsanyi, Lauren Westendorf, Andrew L. Kun, and Orit Shaer. 2016. Towards understanding collaboration around interactive surfaces: Exploring joint visual attention. Posters, UIST 2016.

- Orit Shaer, Consuelo Valdes, Casey Grote, Wendy Xu, and Taili Feng. 2013. Enhancing Data-Driven Collaboration with Large-Scale Interactive Tabletops. ACM CHI 2013.

Project Brief:

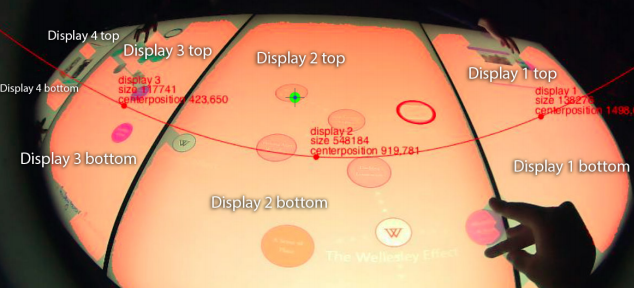

This collaborative project with the University of New Hampshire seeks to gain a deep understanding of how the design characteristics of large-scale multi-device environments affect users’ ability to collaborate. We will examine an environment that integrates a multi-touch interactive wall with a large tabletop display and personal devices. We will use eye tracking to study the visual behavior of users while they work in this multi-device environment.

For individuals, we want to understand how design decisions will influence their ability to consume information. Will they notice visual information? How long will they look at it before acting upon it? What are the areas of interest for users, and what are the patterns of shifting visual attention between these areas? What are the patterns of visual attention before acting upon information?
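As a rough illustration of how the questions above could be turned into measurements, the following sketch computes dwell time per area of interest (AOI) and counts shifts of attention between AOIs from a stream of gaze samples. It is not the project's analysis pipeline: the AOI names, the rectangle coordinates, the sample format, and the function names are all assumptions made for this example.

```python
from collections import Counter

# Hypothetical AOIs as (x_min, y_min, x_max, y_max) rectangles in a shared
# coordinate system. The names and coordinates are illustrative assumptions.
AOIS = {
    "wall": (0, 0, 100, 50),
    "tabletop": (0, 60, 100, 100),
}


def aoi_of(x, y, aois=AOIS):
    """Return the name of the first AOI containing the point, or None."""
    for name, (x0, y0, x1, y1) in aois.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return None


def dwell_and_transitions(samples, aois=AOIS):
    """Summarize one user's gaze stream.

    samples: list of (timestamp_seconds, x, y), sorted by timestamp.
    Returns (dwell, transitions), where dwell maps an AOI name to seconds
    spent there and transitions maps (from_aoi, to_aoi) to a count.
    Each sample is credited with the time until the next sample, so the
    final sample contributes no dwell time.
    """
    dwell = Counter()
    transitions = Counter()
    prev_aoi = None
    for (t0, x, y), (t1, _, _) in zip(samples, samples[1:]):
        aoi = aoi_of(x, y, aois)
        if aoi is None:
            continue  # gaze fell outside every AOI
        dwell[aoi] += t1 - t0
        if prev_aoi is not None and aoi != prev_aoi:
            transitions[(prev_aoi, aoi)] += 1
        prev_aoi = aoi
    return dict(dwell), dict(transitions)
```

Under these assumptions, the dwell totals speak to how long a user looks at a region, and the transition counts give a first view of how attention shifts between regions. Relating either measure to the moment a user acts would additionally require timestamped interaction events, which this sketch does not model.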

For user groups, we need to understand how design decisions impact the joint visual attention of multiple users. What is the relationship between the gaze locations of multiple users, in both time and space? Do users who collaborate on a (sub)task view the same visual targets during the collaborative effort? Which people does a user look at when acting on the information? Are there collaborative efforts in which users divide visual tasks to improve task performance? And how do these visual behaviors depend on the number of users, their relationships (e.g., members of the same team, or only slightly familiar with each other), and the characteristics of the environment?
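One simple way to quantify the relationship between two users' gaze locations in time and space is the share of samples in which their gaze points fall close together, optionally allowing one user to trail the other. The sketch below is only an illustration under stated assumptions: both gaze streams are sampled at the same rate, are mapped to the same surface coordinates, and start at the same moment. The function name, the `radius` threshold, and the `lag` parameter are hypothetical choices for this example, not measures taken from the project.

```python
import math


def joint_attention_ratio(gaze_a, gaze_b, radius, lag=0):
    """Fraction of compared samples in which two users look at nearby points.

    gaze_a, gaze_b: lists of (x, y) gaze points on a shared surface,
        sampled at the same rate and starting at the same time (assumption).
    radius: maximum distance, in surface units, to count as joint attention.
    lag: number of samples by which user B trails user A (0 = simultaneous),
        so gaze_a[i] is compared with gaze_b[i + lag].
    """
    if lag < 0:
        raise ValueError("lag must be non-negative")
    pairs = list(zip(gaze_a, gaze_b[lag:]))
    if not pairs:
        return 0.0
    close = sum(
        1
        for (ax, ay), (bx, by) in pairs
        if math.hypot(ax - bx, ay - by) <= radius
    )
    return close / len(pairs)
```

Evaluating the ratio over a range of `lag` values would show whether one user tends to follow the other's gaze after a delay rather than looking at the same target simultaneously. A consistently low ratio during a shared task could be one hint that users are dividing the visual work, although this sketch alone cannot establish that.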